The Number of All Possible Meaningful or Discernible Pictures

Theo Pavlidis, © 2009

New Draft, May 6, 2009

Link to Old Draft

One of the challenges in statistical pattern recognition is to select representative sets of images to train and test an algorithm. A similar challenge occurs in content-based image retrieval where one must develop a measure of image similarity. Again there is a need for representative image sets for training and learning. Quite often authors claim (explicitly or implicitly) that the set they use is representative because of the large number of images it contains, usually tens of thousands or even several million of images.

The purpose of this paper is to show that using even millions of images is no guarantee for having a representative set because the number of all possible images is in the trillions. Unless one can specify characteristics of the set of images of interest (for example, images of numerical digits), sheer numbers do not guarantee that a set is representative of all images.

At first sight, the enormity of the number of all possible images seems obvious. For example, consider 32 by 32 images with 3 bits per pixel (one bit per color). The number of possible images of such size is 23072or about 10300, a truly “astronomical" number. The trouble with that argument is that most of these images do not represent scenes that could possibly have been captured by a camera. Therefore we must ask a much harder question.

What is the number of all possible pictures that may represent a scene? In other words, pictures that will represent not just a collection of random pixels but a collection of pixels that, at least, some human observers will interpreted as representing something that could have been captured by a camera. I used for brevity the term meaningful pictures even though the word "meaning" depends a lot on the observer. (Others may prefer the term valid pictures.)

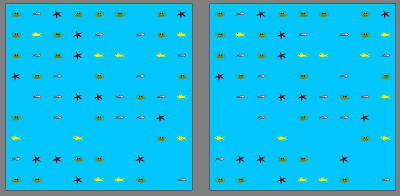

I will try to answer this question by providing a lower bound. Suppose I subdivide an image in K-by-K square blocks and paint each block with one of a small set of specific images. Figure 1 shows an example where K equals 9 Two images are shown side by side and they differ in only one block. Each block may be either empty or contain an image of a sea creature. The block size is 60 by 60, so the size of each image is 540 by 540. Each image is meaningful (valid) to a human observer (although people may have trouble finding the block where the two images differ). How many such images exist? Because each block can have one of five configurations and there are 81 such blocks the answer is 581 > 1056 . (A trillion is 1012.)The number 1056 is only a lower bound on the number of all possible valid images. By selecting K equal to 20 and the same block size we would have a 1200 by 1200 pixel image and we could probably accommodate 10 choices for each block, so the number of such images would be 10400 and we could go even higher. And that would still leave many images out.

|

| FIGURE 1: Two images consisting of 9x9 blocks and differing in only one block. Click on the images to see them full size. |

Estimate No. 1: 1056 is a very conservative lower bound to the number of all possible meaningful/valid images. The number of meaningful/valid images is at least as high as 10400.

An even harder question is to ask how such images will be discernible by humans. For example, there may be several images of foliage but most people could not tell them apart. This question can be farther refined by distinguishing between pairs of pictures that are seen as different when displayed side by side or pairs that can be differentiated from memory. In this note I will consider only the first case. I wrote a program that uses a random number generator to create K-by-K boards and then (randomly) changes the images of one of the squares and displays both boards side-by-side. Click here for software that consists of an executable module as well as the source code (as a zipped Microsoft Visual Studio project). Figure 1 was produced by this program. The core of the program is quite simple. For the case where only two images are allowed per block the code is

// CORE CODE // mat[] is an integer array for(int i=0; i<K*K; i++) mat[i] = rand()%2; // display the matrix mat[] in K by K form int flip = rand()%(K*K); mat[flip] = 1 - mat[flip]; // display the matrix mat[] in K by K form in a new position

The complete program allows for timing of the user reaction and readers are invited to experiment. For K equal to six the time is in the order of a few seconds, from about 4 secs for two configurations to about 6 secs for five configurations. (I have asked several people to do the task from printouts of images and they all did it within a few seconds.) Thus 536 seems to be a lower bound on the number of discernible images. That exceeds 1025 , which is greater than a trillion squared. If we allow observers to spend over 10 seconds perusing a pair of images then find differences for higher values of K. (Even for K equal to 9, as in Figure 1, it takes less than 30 seconds.)

Estimate No. 2: 1025 (greater than a trillion squared) is a very conservative lower bound to the number of all possible discernible images.

The ability of humans to differentiate images on the basis of small details, even when recalled from memory, has been documented recently by Brady et al, 2008, therefore Estimate No. 2 of lower bounds is not that surprising.

The huge number of possible images raises several questions about pixel based vision both from computational and neuroscience viewpoints. A recent editorial in Nature by Mazer (2008) discuss the issues from the viewpoint of neuroscience pointing out to the existence of areas in the brain that respond to structures rather than pixels. (Additional references can be found in that editorial.)

Some researchers in machine vision dismiss the issue by arguing that humans lump large numbers of images into a small number of categories, but that misses an important point. Algorithms for image classification or retrieval perform their computations on the pixel space and one must prove that all these calculations provide similar results on widely differing pixels sets that correspond to the same category. The wide disparity in pixels values of “similar” images (and conversely pixel-wide similarities on “different” images) present obstacles in extending laboratory results to practice (Pavlidis 2008).

Other computer vision authors have focus on the challenges posed by the ability of the human visual system to process very large amounts of information and its implication on learning mechanisms. It is beyond the scope of this short paper to provide a review of all such efforts but I want to point out one of the earlier papers by Tsotsos (1987). While an explicit number of all possible images is not the focus of that paper, the parameters discussed in it point to an estimate of 105400, much higher than the number given in (1).

Restricting the classes of images to be dealt simplifies, but it does not eliminate the problem. The main benefit from class restriction is the possibility to model the formation of images and therefore use synthetic data. This has been done succesfully in the area of document analysis where some researchers have been relying on synthetic data to augment the size of the data sets used to train and test classifiers (Baird 2006). In a recent paper Nonnemaker and Baird (2009) have used the typeface description model of Metafont and an image degradation model to show that properly constructed sythetic data are not only safe to use but also improve performance. The paper by Jin and Geman (2006) presents a novel approach in dealing with the high dimensionality of the image space by relying on context at several levels.

In many ways the synthetic data approach is the simplest to implement. For example, when dealing with image retrieval problems the "learning" and "testting" sets should be augmented by images obtained from the given by simulating different camera settings and lighting conditions or bt applying image enhancement methods. Such trasnformations are simple to implement and can increase the size of the tests by at least a factor of 10.

Clearly, more systematic research could establish tighter bounds than those given here, but such results may be more important for psychology or neuroscience than technology. Whether the number of all possible images is 1030 or 103000 may be of interest to pure science, but either number implies that it is impossible to conduct research that relies on "knowing" all possible images.

Acknowledgements: I want to thank Prof. G. Zelinsky (Dept. of Psychology, Stony Brook University) for helpful comments on an earlier draft and for pointing out Brady et al, 2008.

Literature Cited

Baird, H. S. 2006 "The State of the Art of Document Degradation Modeling" in Digital Document Processing: Major Directions and Recent Advances, B. B. Chaudhuri (ed.), Advances in Pattern Recognition series, Springer.

Brady, T. F., T. Konkle, G. A. Alvarez, and A. Oliva, 2008 "Visual long-term memory has a massive storage capacity for object details", PNAS, vol. 105, No. 38.

Jin, Y. and S. Geman, 2006 "Context and Hierarchy in a Probabilistic Image Model", CVPR

Mazer, J. A. 2008 "So many pixels, so little time" Nature Neuroscience, 11 (Nov. 2008), pp. 1243-1244.

Nonnemaker, J. and H. S. Baird, 2009, ``Using Synthetic Data Safely in Classification," Proc., IS&T/SPIE Conf. on Document Recognition and Retrieval (DRR 2009), San Jose, CA, January 28 - February 1, 2009.

Pavlidis, T. 2008 "Limitations of Content-based Image Retrieval", invited plenary talk at the 19th International Conference on Pattern Recognition, Tampa, Florida, Dec. 8-11, 2008. Slides.

Tsotsos, J. K. 1987, "A 'Complexity Level' Analysis of Vision" ICCV.

Back to CBIR Index — Back to Technical Index — Link to Software